Introduction

Ah, the magic of bringing characters to life in three dimensions! If you’ve ever been mesmerized by the fluid movements of characters in films like Toy Story, the lifelike avatars in your favorite video games, or even the intricate animations in VR experiences, you’ve witnessed the art of 3D character animation. But what exactly goes into this enchanting process?

3D character animation is the art of creating lifelike characters and making them move and interact in a virtual 3D space. It’s a blend of artistry and technology, requiring a deep understanding of anatomy, motion, and storytelling, along with a good grasp of complex software.

Importance in Various Industries

From Hollywood blockbusters to cutting-edge virtual reality experiences, 3D character animation is everywhere. In the film industry, studios like Pixar and DreamWorks use advanced animation techniques to create unforgettable movies that tug at our heartstrings. In gaming, companies like Naughty Dog and Blizzard Entertainment bring characters to life in immersive worlds that keep us glued to our screens for hours. Advertising agencies use 3D animation to create compelling and memorable ads that capture consumer attention. Even in virtual reality, realistic avatars and characters enhance the sense of immersion, making experiences more engaging and lifelike.

Evolution of 3D Character Animation Over the Years

The journey of 3D character animation is a fascinating one. It started with humble beginnings, where early attempts at 3D animation involved basic wireframe models and rudimentary movements. But oh, how far we’ve come! The introduction of keyframe animation in the 1980s was a game-changer, allowing animators to define critical points of motion and let the computer interpolate the frames in between. This was followed by breakthroughs in rigging and skinning, which enabled more complex and realistic movements.

In the 1990s, the release of movies like Jurassic Park showcased the potential of CGI and 3D animation, pushing the boundaries of what was possible. The advent of sophisticated software like Autodesk Maya and Blender made these techniques more accessible to artists worldwide. And let’s not forget the impact of motion capture technology, which revolutionized the way we animate human movements by capturing real-life performances and translating them into digital characters.

Today, with the rise of real-time rendering engines like Unreal Engine, 3D character animation continues to evolve, offering new possibilities for interactive storytelling and immersive experiences. The future looks even brighter with the integration of AI and machine learning, promising to make the animation process faster and more intuitive.

Fundamentals of 3D Character Animation

At its core, 3D character animation is the process of creating moving, talking, and emoting characters in a three-dimensional space, with animators breathing life into digital models through meticulous planning and execution. Let’s break down the key components that make this magic happen.

Keyframes: Think of keyframes as the backbone of 3D animation. These are specific frames where the animator sets important positions or poses of a character. The software then interpolates the frames between these key poses to create smooth motion. Imagine creating a bouncing ball animation: you’d set a keyframe at the top of the bounce and another at the bottom. The software does the heavy lifting, filling in the rest.
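To make the interpolation idea concrete, here is a minimal Python sketch of the bouncing ball. It assumes plain linear interpolation between keys, which is only the simplest option (animation packages typically offer curve-based interpolation as well), and the function name `interpolate` and the frame numbers are made up for illustration.

```python
from bisect import bisect_right

def interpolate(keyframes, frame):
    """Linearly interpolate a value at `frame` from sorted (frame, value) keyframes."""
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)          # index of the first key after `frame`
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)             # 0.0 at the previous key, 1.0 at the next
    return v0 + t * (v1 - v0)

# Hypothetical bouncing ball: height keyed at the top, the bottom, and the top again.
ball_height = [(0, 10.0), (12, 0.0), (24, 10.0)]
heights = [interpolate(ball_height, f) for f in range(0, 25, 6)]
print(heights)
```

Only three keys are set by hand; every other frame is computed from them.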

Rigging: Before you can animate, you need a rig. Rigging is like building a skeleton for your character, complete with joints and bones that control its movement. This skeleton is then connected to the 3D model, allowing animators to move the character in realistic ways. Rigging can be quite complex, especially when dealing with characters that need to perform intricate actions.

Skinning: Once the rig is in place, the next step is skinning. This is where the character’s mesh (or skin) is attached to the rig. Proper skinning ensures that the mesh deforms correctly as the character moves. For instance, when a character bends an arm, the skin needs to stretch and compress naturally. Poor skinning can lead to unsightly distortions, breaking the illusion of life.
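One common way to attach a mesh to bones is weighted blending, often called linear blend skinning. The sketch below assumes that technique, works in 2D for brevity, and keeps the upper arm fixed while the forearm rotates at the elbow. The names `rotate_about` and `skin_vertex` and all the numbers are illustrative, not taken from any particular package.

```python
import math

def rotate_about(point, pivot, angle):
    """Rotate a 2D point around a pivot by `angle` radians."""
    x, y = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * x - s * y, pivot[1] + s * x + c * y)

def skin_vertex(vertex, weights, elbow, elbow_angle):
    """Blend the positions the upper-arm and forearm bones would each give the vertex."""
    w_upper, w_fore = weights                       # weights are expected to sum to 1
    by_upper = vertex                               # upper arm stays still in this sketch
    by_fore = rotate_about(vertex, elbow, elbow_angle)
    return (w_upper * by_upper[0] + w_fore * by_fore[0],
            w_upper * by_upper[1] + w_fore * by_fore[1])

elbow = (1.0, 0.0)
bend = math.radians(90)
wrist = skin_vertex((2.0, 0.0), (0.0, 1.0), elbow, bend)       # follows the forearm only
near_elbow = skin_vertex((1.2, 0.0), (0.5, 0.5), elbow, bend)  # shared by both bones
print(wrist, near_elbow)
```

The vertex near the elbow lands between where each bone alone would put it, which is what lets the skin bend smoothly instead of snapping. Badly chosen weights are one source of the distortions mentioned above.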

The 12 Principles of Animation: Set out by Disney animators Frank Thomas and Ollie Johnston in their 1981 book The Illusion of Life, the 12 Principles of Animation distill practices that Disney’s animators had been refining since the 1930s, and they are the foundation of all great animation. They were initially devised for 2D animation but apply equally well to 3D. Here are a few key principles:

- Squash and Stretch: This principle gives a sense of weight and flexibility to objects. When animating a bouncing ball, it should squash when it hits the ground and stretch when it’s in the air, enhancing the sense of motion and impact.

- Anticipation: To make an action feel real, it needs a setup. For instance, before a character jumps, they need to crouch down. This principle helps prepare the audience for what’s about to happen, making the movement more believable.

- Staging: This is all about directing the audience’s attention to the most important part of the scene. Whether it’s through lighting, framing, or positioning, good staging ensures that viewers focus on the right elements.

Other principles include Follow Through and Overlapping Action, Arcs, Exaggeration, and Timing, all contributing to more lifelike and engaging animations.
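Squash and Stretch is easy to express in numbers. A common convention is to keep the object's volume constant, so that a ball squashed vertically gets wider and a stretched one gets thinner. The sketch below assumes that convention, and `squash_stretch` is a hypothetical helper name.

```python
import math

def squash_stretch(stretch_y):
    """Return (x, y, z) scale factors that keep volume constant for a vertical stretch."""
    if stretch_y <= 0:
        raise ValueError("stretch_y must be positive")
    side = 1.0 / math.sqrt(stretch_y)   # x and z compensate so that x * y * z == 1
    return (side, stretch_y, side)

squashed = squash_stretch(0.5)    # flattened on impact, so it widens
stretched = squash_stretch(1.5)   # elongated in flight, so it narrows
print(squashed, stretched)
```

In practice these scale factors would themselves be keyframed, peaking at the moment of impact and easing back toward 1.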

Essential Tools and Software

Exploring the landscape of 3D animation software reveals a variety of powerful tools, each catering to different needs and expertise levels. Understanding these options can help you make an informed decision for your project.

Autodesk Maya: Widely regarded as an industry standard for 3D animation, Autodesk Maya is favored by major studios for creating films, TV shows, and video games. Maya’s comprehensive toolset includes advanced character rigging systems and realistic fluid simulations, making it well suited to high-end productions. However, its complexity can be intimidating for newcomers.

Blender: A free and open-source alternative, Blender has gained popularity due to its robust features and active community. Blender provides tools for modeling, rigging, animation, simulation, rendering, compositing, and motion tracking. Its user-friendly interface and frequent updates make it accessible for both beginners and seasoned professionals. Additionally, the extensive library of plugins and community-created assets can significantly enhance your workflow.

Cinema 4D: Developed by Maxon, Cinema 4D is renowned for its ease of use and intuitive interface, making it a favorite among motion graphics artists. It excels in creating stunning visual effects and integrates seamlessly with other design tools like Adobe After Effects. While it may not be as feature-rich in certain areas as Maya or Blender, its simplicity and powerful animation tools make it an excellent choice for designers and animators seeking a streamlined workflow.

Houdini: Known for its procedural generation approach, Houdini by SideFX offers incredible flexibility and control over animations. This software is particularly favored in the VFX industry for creating complex simulations like smoke, fire, and fluids. Although Houdini’s node-based workflow can be challenging to master, it provides unparalleled power and versatility for advanced users.

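Animated characters in games also need sound, and that is usually handled by audio middleware. Both FMOD and Wwise, two widely used audio middleware tools, offer seamless integration with major game development engines, including Unity and Unreal Engine, which are among the most widely used platforms in the industry.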

FMOD: FMOD provides a robust integration with Unity, allowing designers to implement interactive audio directly within the engine. The FMOD Unity plugin makes it easy to link audio events with game events, creating a cohesive audio experience. FMOD’s Unreal Engine integration allows for advanced audio functionality within the Unreal Editor. Designers can use FMOD Studio to create complex audio events and control them through Unreal’s Blueprint system.

Wwise: Wwise’s Unity integration is similarly powerful, offering tools to manage audio assets and real-time audio behaviors, with detailed control over audio settings and dynamic soundscapes. Wwise also integrates tightly with Unreal Engine, offering features like spatial audio and real-time parameter control, along with extensive customization options and support for advanced audio behaviors.

Creating a 3D Character: Step-by-Step Guide

Bringing a 3D character to life involves several intricate steps, each crucial to the overall process. Let’s walk through the essential stages, from initial concept to final rendering.

Conceptualization and Storyboarding

Every great character begins with a concept. Conceptualization involves brainstorming ideas, creating sketches, and defining the character’s personality, backstory, and role in the project. This stage often includes storyboarding, which helps visualize the character’s movements and interactions within different scenes. Storyboards serve as a blueprint, guiding the entire animation process.

Modeling

Modeling is the art of creating the character’s 3D shape. This step can be approached in two primary ways: polygonal modeling and sculpting.

- Polygonal Modeling: This technique involves creating a mesh of polygons to form the character’s basic shape. You start with a simple geometric form, such as a cube, and refine it by adding and moving vertices, edges, and faces to build up detail. This method is efficient for characters with angular features and well-defined structures, and it gives direct control over the polygon count.

- Sculpting: Sculpting allows for a more organic approach, resembling traditional clay sculpting. Digital sculpting tools like ZBrush let you manipulate a virtual piece of clay with brushes and tools to create intricate details and textures. This method is ideal for characters that require a high level of detail and realism.
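Under the hood, a polygon mesh is usually stored as a list of vertex positions plus a list of faces that index into it. The sketch below uses a unit cube as the "simple geometric form" and shows two routine operations, splitting quads into triangles and counting edges. The layout and helper names are assumptions for illustration, not the file format of any specific tool.

```python
# A unit cube as a polygon mesh: 8 vertices and 6 four-sided faces (quads).
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),
]
quads = [
    (0, 3, 2, 1), (4, 5, 6, 7),   # bottom (z = 0), top (z = 1)
    (0, 1, 5, 4), (2, 3, 7, 6),   # front (y = 0), back (y = 1)
    (1, 2, 6, 5), (0, 4, 7, 3),   # right (x = 1), left (x = 0)
]

def triangulate(faces):
    """Split each polygon into triangles by fanning out from its first vertex."""
    triangles = []
    for face in faces:
        for i in range(1, len(face) - 1):
            triangles.append((face[0], face[i], face[i + 1]))
    return triangles

def edge_count(faces):
    """Count unique undirected edges across all faces."""
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add((min(a, b), max(a, b)))
    return len(edges)

triangles = triangulate(quads)
print(len(vertices), edge_count(quads), len(quads), len(triangles))
```

Adding detail means adding more vertices and faces to these lists, which is why polygon count is a direct measure of how heavy a character model is.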

Rigging and Skinning

Once the model is ready, it needs a skeleton to move. This is where rigging and skinning come into play.

- Creating the Skeleton: Rigging involves building a skeleton for the character, complete with bones and joints. This skeleton will drive the character’s movements. Each joint can be manipulated to pose the character in various ways.

- Attaching the Skin: Skinning is the process of attaching the character’s mesh to the rig. Proper skinning ensures that the mesh deforms naturally when the skeleton moves, preventing unnatural stretching or pinching. This step is crucial for realistic character movements.
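A skeleton is essentially a tree of joints, where each joint stores a rotation relative to its parent. The sketch below models a tiny 2D arm and computes where each joint ends up in the scene. The `Joint` class, its fields, and the convention that a bone points along its parent's accumulated rotation are all assumptions made for this example.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Joint:
    name: str
    length: float                      # length of the bone leading to this joint
    angle: float = 0.0                 # local rotation in radians, relative to the parent
    parent: Optional["Joint"] = None

    def world_angle(self):
        """Accumulate rotations from the root down to this joint."""
        base = self.parent.world_angle() if self.parent else 0.0
        return base + self.angle

    def world_position(self):
        """Walk up the hierarchy; the root joint sits at the origin."""
        if self.parent is None:
            return (0.0, 0.0)
        px, py = self.parent.world_position()
        a = self.parent.world_angle()
        return (px + self.length * math.cos(a), py + self.length * math.sin(a))

shoulder = Joint("shoulder", length=0.0, angle=math.radians(90))
elbow = Joint("elbow", length=2.0, angle=math.radians(-90), parent=shoulder)
wrist = Joint("wrist", length=1.0, parent=elbow)
print(elbow.world_position(), wrist.world_position())
```

Rotating the shoulder moves the elbow and wrist with it, because every child inherits its parent's rotation. That parent-to-child dependency is what lets a handful of joint controls pose an entire character.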

Animation

Animating the character involves defining how it moves and interacts with the environment. There are two main techniques: keyframe animation and motion capture.

- Keyframe Animation: In keyframe animation, the animator sets key poses for the character at specific points in time. The software then interpolates the frames between these key poses, creating smooth transitions. This method gives animators full control over the character’s movements.

- Motion Capture: Motion capture, or mocap, involves recording the movements of a live actor and applying those movements to the 3D character. This technique captures realistic human motions and is widely used in film and video game production.

Texturing and Shading

Texturing and shading add color, texture, and material properties to the character’s surface. Texturing involves applying images to the 3D model to create details like skin, clothing, and hair. Shading defines how the surfaces interact with light, giving them properties like glossiness, transparency, or roughness.
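As a taste of what "interacting with light" means in practice, here is a sketch of Lambertian diffuse shading, one of the simplest shading models: a surface looks brightest when it faces the light head-on and darkens as it turns away. Production shaders are far more elaborate, and the color values below are invented for the example.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, to_light, base_color):
    """Scale a base color by the cosine of the angle between the normal and the light."""
    n, l = normalize(normal), normalize(to_light)
    intensity = max(0.0, sum(a * b for a, b in zip(n, l)))  # clamp: no negative light
    return tuple(c * intensity for c in base_color)

skin_tone = (0.9, 0.7, 0.6)   # hypothetical RGB value sampled from a texture
facing = lambert((0, 0, 1), (0, 0, 1), skin_tone)    # light straight on
angled = lambert((0, 0, 1), (1, 0, 1), skin_tone)    # light at 45 degrees
away = lambert((0, 0, 1), (0, 0, -1), skin_tone)     # light behind the surface
print(facing, angled, away)
```

Here the texture supplies the base color and the shading model decides how much of it reaches the eye, which is the division of labor described above.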

Lighting and Rendering

The final steps, lighting and rendering, bring the character to life in its virtual environment.

- Lighting: Proper lighting is essential to highlight the character’s features and set the mood of the scene. Different types of lights and lighting setups can dramatically affect the appearance and emotional tone of the animation.

- Rendering: Rendering is the process of generating the final image or animation from the 3D scene. This involves calculating light interactions, shadows, reflections, and textures to produce a photorealistic or stylized output. The rendering process can be time-consuming, but it’s where all the hard work comes together to create the final masterpiece.

Advanced Techniques in 3D Animation

Mastering the basics of 3D character animation is just the beginning. To create truly compelling animations, animators need to delve into advanced techniques. These methods enhance realism and bring out the subtleties of a character’s performance. Let’s explore some of these advanced techniques.

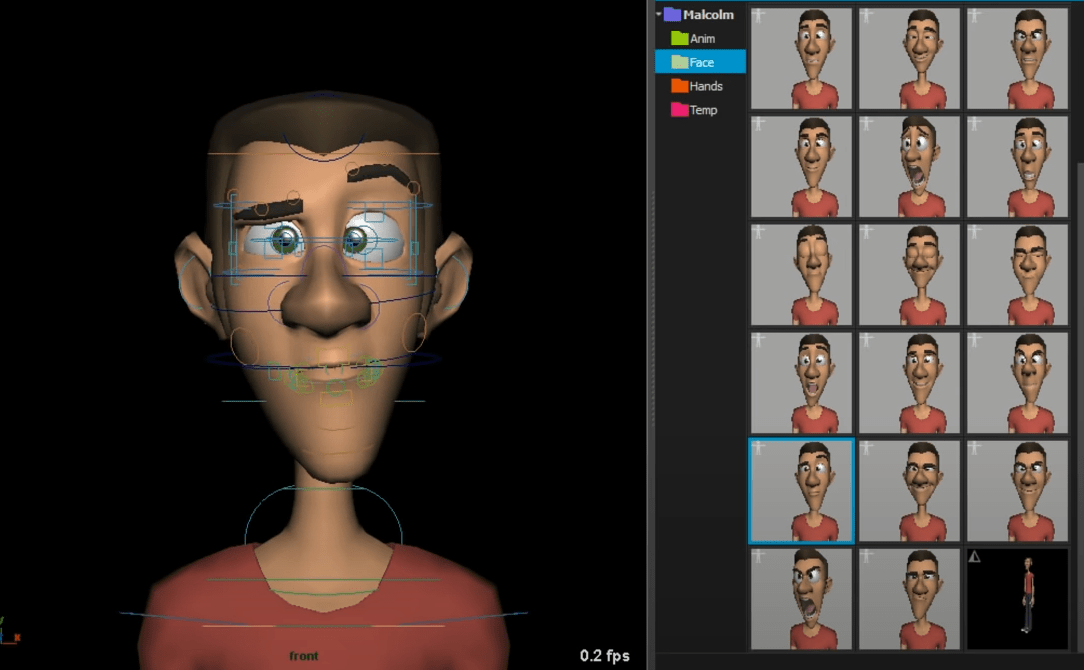

Facial Animation and Lip-Syncing

Facial animation is crucial for conveying emotions and expressions, making characters more relatable and believable. This technique involves animating the intricate movements of a character’s face, including the eyes, eyebrows, mouth, and cheeks. Lip-syncing adds another layer, matching mouth movements to spoken dialogue. Advanced tools and techniques, such as blend shapes and bone-based rigs, allow for detailed and nuanced facial animations.
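Blend shapes are simple at heart: each shape is a sculpted copy of the neutral face, and a weight controls how far every vertex moves toward it. The sketch below uses a two-vertex "mouth" to show the arithmetic. The shape names, coordinates, and the helper `apply_blend_shapes` are made up for illustration.

```python
def apply_blend_shapes(base, targets, weights):
    """Offset each base vertex by the weighted difference to every target shape."""
    result = []
    for i, vertex in enumerate(base):
        blended = list(vertex)
        for name, weight in weights.items():
            target_vertex = targets[name][i]
            for axis in range(len(blended)):
                blended[axis] += weight * (target_vertex[axis] - vertex[axis])
        result.append(tuple(blended))
    return result

# Hypothetical mouth with two vertices: left corner and right corner.
neutral = [(-1.0, 0.0), (1.0, 0.0)]
shapes = {
    "smile": [(-1.2, 0.4), (1.2, 0.4)],
    "open": [(-0.9, -0.3), (0.9, -0.3)],
}
half_smile = apply_blend_shapes(neutral, shapes, {"smile": 0.5})
mixed = apply_blend_shapes(neutral, shapes, {"smile": 1.0, "open": 0.5})
print(half_smile, mixed)
```

For lip-syncing, the weights are animated over time so that the mouth shape for each sound reaches full strength when that sound is spoken.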

Physics-Based Animation

Physics-based animation relies on the principles of physics to create realistic movements and interactions. This approach is often used for simulating natural phenomena and dynamic effects. Two key areas in physics-based animation are dynamics and particle systems.

- Dynamics: Dynamics simulate the physical properties of objects, such as gravity, collisions, and friction. This technique is essential for creating realistic interactions between characters and their environments. For instance, dynamics can be used to animate a character falling, pushing objects, or interacting with fluid surfaces.

- Particle Systems: Particle systems are used to create effects like smoke, fire, rain, and explosions. By controlling the behavior of thousands of small particles, animators can simulate complex natural phenomena that react realistically to forces and collisions.
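Both ideas fit in a few lines of code. The sketch below emits a burst of particles, applies gravity at each time step, and bounces them off a ground plane. It assumes a basic semi-implicit Euler integrator and a made-up restitution value. Real simulation tools use more robust solvers, so treat this as a toy model only.

```python
import random

GRAVITY = -9.81  # metres per second squared, acting along y

def step(particles, dt, restitution=0.5):
    """Advance every particle by one time step using semi-implicit Euler integration."""
    for p in particles:
        p["vy"] += GRAVITY * dt          # force changes velocity
        p["x"] += p["vx"] * dt           # velocity changes position
        p["y"] += p["vy"] * dt
        if p["y"] < 0.0:                 # collided with the ground plane at y = 0
            p["y"] = 0.0
            p["vy"] = -p["vy"] * restitution   # bounce back with some energy lost

def emit(count, seed=0):
    """Spawn particles at the origin with randomized sideways and upward velocities."""
    rng = random.Random(seed)
    return [{"x": 0.0, "y": 0.0, "vx": rng.uniform(-1, 1), "vy": rng.uniform(2, 5)}
            for _ in range(count)]

sparks = emit(100)
for _ in range(240):                     # 4 seconds at 60 steps per second
    step(sparks, 1 / 60)
print(max(p["y"] for p in sparks))
```

Nobody animates the hundred sparks by hand. The animator chooses the emitter settings and the forces, and the simulation produces the motion.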

Advanced Rigging Techniques

Advanced rigging techniques provide animators with more control and flexibility over character movements, enhancing the realism and expressiveness of animations.

- IK/FK Blending: Inverse Kinematics (IK) and Forward Kinematics (FK) are two approaches to animating joints. With FK, the animator rotates each joint in turn from the root of the chain outward, which suits natural, flowing arcs such as a swinging arm. With IK, the animator positions the end of the chain and a solver works out the joint rotations, which is ideal for precise control, like placing a hand on a surface. IK/FK blending allows animators to switch seamlessly between these methods, combining the best of both worlds.

- Deformation Rigging: Deformation rigging involves creating rigs that allow for complex, natural-looking deformations of a character’s mesh. Techniques like muscle simulations and corrective blend shapes ensure that the character’s skin moves convincingly over the underlying skeleton and muscles.
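The IK side of that blend can be sketched with the classic two-bone solver, which uses the law of cosines to find shoulder and elbow angles for a 2D arm. The blend function here simply mixes joint angles linearly, which is one simple approach and ignores angle wrap-around. All function names and numbers are illustrative assumptions.

```python
import math

def two_bone_ik(l1, l2, tx, ty):
    """Return (shoulder, elbow) angles that place the hand at (tx, ty), if reachable."""
    dist = math.hypot(tx, ty)
    dist = max(abs(l1 - l2), min(l1 + l2, dist))        # clamp to the reachable range
    cos_elbow = (dist**2 - l1**2 - l2**2) / (2 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    shoulder = math.atan2(ty, tx) - math.atan2(l2 * math.sin(elbow),
                                               l1 + l2 * math.cos(elbow))
    return shoulder, elbow

def forward(l1, l2, shoulder, elbow):
    """FK: compute the hand position from the two joint angles."""
    ex, ey = l1 * math.cos(shoulder), l1 * math.sin(shoulder)
    return (ex + l2 * math.cos(shoulder + elbow), ey + l2 * math.sin(shoulder + elbow))

def blend(fk_angles, ik_angles, weight):
    """Mix two poses: weight 0.0 is pure FK, weight 1.0 is pure IK."""
    return tuple(f + weight * (i - f) for f, i in zip(fk_angles, ik_angles))

fk_pose = (0.3, 0.2)                          # hypothetical hand-animated FK angles
ik_pose = two_bone_ik(1.0, 1.0, 1.0, 1.0)     # solve for a hand target at (1, 1)
hand = forward(1.0, 1.0, *ik_pose)
halfway = blend(fk_pose, ik_pose, 0.5)
print(hand, halfway)
```

Animating the blend weight over a few frames lets a hand swing freely in FK, then settle onto a table in IK without a visible pop.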

Integrating Motion Capture Data

Motion capture (mocap) data integration involves recording the movements of real actors and applying this data to 3D characters. This technique is invaluable for achieving realistic body mechanics and subtle acting nuances that would be challenging to animate by hand. By capturing detailed motion, animators can focus on refining the performance, enhancing expressiveness, and ensuring that the character’s movements are both natural and compelling.
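Part of that refinement is cleaning up the raw recording, since captured data often contains jitter or brief tracking glitches. The sketch below assumes a simple centered moving average over one joint's angle curve. Real pipelines tend to use more careful filters, and the sample values are invented.

```python
def smooth(samples, window=5):
    """Centered moving average; the window shrinks near the ends of the clip."""
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        lo, hi = max(0, i - half), min(len(samples), i + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed

# Hypothetical knee angle in degrees with a one-frame tracking glitch at index 3.
captured = [10.0, 12.0, 14.0, 40.0, 18.0, 20.0, 22.0]
cleaned = smooth(captured, window=3)
print(cleaned)
```

The spike is damped, but so is some of the genuine motion around it. That trade-off is why cleanup usually still involves an animator's judgment rather than a filter alone.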

Body Mechanics and Acting

Mastering body mechanics is essential for creating believable animations. This involves understanding how different parts of the body move in relation to each other, maintaining balance, and reacting to forces. Combining body mechanics with strong acting skills allows animators to convey a character’s personality and emotions effectively. Programs like Animation Mentor teach these skills through practical exercises and expert feedback, helping animators bring their characters to life with authenticity and depth.

Trends and Innovations

The world of 3D animation is constantly evolving, driven by technological advancements and creative innovations. These trends are shaping the future of animation, offering new possibilities for animators and storytellers alike.

Real-Time Animation and the Impact of Game Engines

Real-time animation, powered by game engines like Unreal Engine, is revolutionizing the way animations are created and experienced. Unlike traditional rendering, which can be time-consuming, real-time animation allows animators to see changes instantly, streamlining the workflow and enabling rapid iterations. This technology is not only transforming video game development but also making waves in film and TV production, where directors and animators can visualize scenes in real-time.

Real-time animation also facilitates interactive storytelling, where viewers can engage with and influence the narrative. This is particularly impactful in virtual reality (VR) and augmented reality (AR) applications, creating immersive experiences that were previously unimaginable.

AI and Machine Learning in Animation

Artificial Intelligence (AI) and machine learning are increasingly being integrated into the animation pipeline, automating repetitive tasks and enhancing creativity. AI algorithms can assist with various stages of animation, from generating initial sketches and models to animating complex movements.

Machine learning models can analyze vast amounts of motion capture data to create more realistic animations, predict character movements, and even generate new animations based on learned patterns. Tools like DeepMotion leverage AI to simplify the motion capture process, making high-quality animation accessible to a broader range of creators.

Virtual Reality and Immersive Experiences

Virtual reality (VR) and augmented reality (AR) are pushing the boundaries of what’s possible in 3D animation, offering entirely new ways to experience and interact with animated content. In VR, users can step into fully realized 3D worlds and interact with characters in a more immersive and engaging manner. This opens up new storytelling possibilities, where the audience is not just a passive viewer but an active participant in the narrative.

AR brings animated characters and objects into the real world, blending digital and physical experiences. This technology is being used in everything from mobile games and educational apps to marketing campaigns, providing new avenues for creativity and engagement.

The Future of Animation in the Metaverse

The concept of the metaverse—a collective virtual shared space created by the convergence of virtually enhanced physical reality and physically persistent virtual reality—is gaining traction. In the metaverse, animation plays a crucial role in building interactive, immersive environments where users can socialize, work, play, and create.

In this emerging digital frontier, animators are not just creating characters and stories but entire worlds. Real-time rendering, AI-driven animation, and VR/AR technologies are converging to bring the metaverse to life, offering limitless opportunities for innovation. Companies like Meta (formerly Facebook) are investing heavily in developing the metaverse, highlighting the growing importance of 3D animation in this new era.