Introduction

Remember the catchy 8-bit tunes from your favorite childhood video games? Those simple melodies have evolved into complex, immersive soundscapes that can make or break a game. Game audio design, once limited to a few bleeps and bloops, is now a sophisticated art form that enhances the gaming experience. It involves creating everything from background scores to sound effects and voice acting. With advancements in technology, game audio designers now have the tools to create incredibly detailed and realistic audio environments.

Why is audio so crucial in games, you ask? Imagine playing a horror game without the eerie sound effects or a racing game without the roar of engines. Audio sets the mood, provides cues, and immerses players in the game world. It’s not just about what you see on the screen; it’s about what you hear. Great audio design can turn a good game into an unforgettable experience. Whether it’s the suspenseful music in Silent Hill, the iconic sound effects in Super Mario Bros., or the cinematic score in The Last of Us, audio plays a pivotal role in making games memorable.

The Early Days of Game Audio

Ah, the good old days of 8-bit and 16-bit games! This was a time when pixelated graphics ruled and audio was all about chiptunes. Back then, game audio was limited to just a few simultaneous sounds due to the hardware constraints of consoles like the NES and Sega Genesis. But despite these limitations, composers created some of the most memorable soundtracks in gaming history.

Early sound hardware was like a strict, no-nonsense parent: demanding, but it pushed composers toward creative solutions. Consoles had very limited memory and could only handle a handful of sound channels at a time. This meant composers had to be incredibly inventive with their melodies and sound effects. Each sound had to be meticulously crafted to make the most of the available resources. Despite these constraints, the music was catchy, memorable, and had a charm that’s hard to replicate with today’s technology.
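To get a feel for what a single chiptune voice is, here is a minimal Python sketch of a pulse (square) wave with an adjustable duty cycle, the basic waveform behind many 8-bit melodies, plus a naive mixer for a handful of channels. It assumes floating-point output at 44.1 kHz and does not model any particular console’s sound chip; `pulse_wave` and `mix_channels` are illustrative names.

```python
from typing import List

SAMPLE_RATE = 44_100  # output rate for this sketch, not any console's real rate


def pulse_wave(freq_hz: float, duty: float, num_samples: int,
               sample_rate: int = SAMPLE_RATE) -> List[float]:
    """Naive pulse (square) wave: +1.0 for the first `duty` fraction of each
    period and -1.0 for the rest. Duty 0.5 gives a plain square wave."""
    if not 0.0 < duty < 1.0:
        raise ValueError("duty must be strictly between 0 and 1")
    samples = []
    for n in range(num_samples):
        phase = (n * freq_hz / sample_rate) % 1.0  # position within the period, 0..1
        samples.append(1.0 if phase < duty else -1.0)
    return samples


def mix_channels(channels: List[List[float]]) -> List[float]:
    """Average a handful of equal-length channels so the mix stays within -1..1."""
    count = len(channels)
    return [sum(frame) / count for frame in zip(*channels)]
```

For example, `mix_channels([pulse_wave(440.0, 0.5, 44_100), pulse_wave(659.25, 0.25, 44_100)])` produces one second of a two-voice interval. Changing the duty cycle changes the timbre, which was one of the ways composers could vary tone on such simple hardware. This naive version also ignores aliasing, which real synthesizers have to deal with.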

Even with these limitations, early game soundtracks became iconic. Who can forget the upbeat, infectious tunes of Super Mario Bros.? Or the adventurous and epic themes of The Legend of Zelda? These soundtracks weren’t just background noise—they were integral to the gaming experience. Other classics include the energetic beats of Sonic the Hedgehog, the intense and mysterious music of Metroid, and the pulse-pounding tracks of Mega Man. These tunes are so iconic that they’re still celebrated and remixed by fans and musicians today.

Technological Advancements in Game Audio

As technology advanced, so did the quality of game audio. Remember the transition from MIDI to CD-quality audio? It was like going from a tin can telephone to a state-of-the-art sound system. MIDI (Musical Instrument Digital Interface) data stores note instructions rather than recorded sound, so composers could write complex pieces, but what players actually heard depended on the synthesizer in their sound hardware. With the advent of CD-ROMs, games could finally include CD-quality recorded tracks, bringing richer, more immersive soundscapes. This shift allowed composers to use real instruments and high-fidelity recordings, transforming the auditory experience of games.
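The gap between MIDI and recorded audio is easy to see with some rough arithmetic. The Python sketch below builds a standard three-byte MIDI Note On message and computes the raw data rate of CD-quality audio (44.1 kHz, 16-bit, stereo): 176,400 bytes per second, or roughly 10.6 MB per minute before any compression. The function names are illustrative and not taken from any particular library.

```python
def midi_note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message: status (0x90 | channel), note, velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def midi_note_to_hz(note: int) -> float:
    """Equal-tempered pitch of a MIDI note number, with note 69 = A4 = 440 Hz.
    The synthesizer, not the MIDI data, decides what that pitch sounds like."""
    return 440.0 * 2 ** ((note - 69) / 12)


def pcm_bytes_per_second(sample_rate: int = 44_100, bit_depth: int = 16,
                         channels: int = 2) -> int:
    """Raw PCM data rate; the defaults match CD audio (44.1 kHz, 16-bit, stereo)."""
    return sample_rate * (bit_depth // 8) * channels
```

Because each note is a short instruction like `midi_note_on(0, 60, 100)`, a whole MIDI melody can often fit in a few kilobytes, whereas streaming recorded music called for the hundreds of megabytes a CD-ROM offered.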

Sampled sound effects were another game-changer. Early games relied on simple synthesized sounds because of hardware constraints, but once hardware could play back digitized recordings, designers could include realistic sounds like footsteps, gunfire, and environmental noises. This made games feel more lifelike and engaging. The eerie atmosphere in Resident Evil or the heart-pounding gunfights in Call of Duty owe much to this leap in sound effect technology.

Improved sound hardware opened up a world of possibilities for game audio design. The introduction of dedicated sound cards in PCs and advanced audio chips in consoles like the PlayStation and Xbox allowed for more complex and dynamic audio. These advancements enabled real-time audio processing, spatial audio effects, and adaptive soundtracks that could change based on the player’s actions. Games like Final Fantasy VII and Halo showcased how powerful sound hardware could enhance storytelling and gameplay, making the audio experience as memorable as the visuals.
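Full spatial audio systems model distance, direction, and room acoustics, but the most basic building block, placing a mono sound between the left and right speakers, fits in a few lines. This Python sketch uses a constant-power pan law, one common choice among several; `pan_from_position` is a hypothetical helper that turns a horizontal offset from the listener into a pan value.

```python
import math
from typing import Tuple


def constant_power_pan(sample: float, pan: float) -> Tuple[float, float]:
    """Place a mono sample in the stereo field.

    pan runs from -1.0 (hard left) through 0.0 (centre) to +1.0 (hard right).
    Using cos/sin gains keeps left**2 + right**2 constant, so the sound does
    not dip in loudness as it moves through the centre.
    """
    pan = max(-1.0, min(1.0, pan))        # clamp out-of-range input
    angle = (pan + 1.0) * math.pi / 4.0   # map -1..1 onto 0..pi/2
    return sample * math.cos(angle), sample * math.sin(angle)


def pan_from_position(listener_x: float, source_x: float, width: float) -> float:
    """Hypothetical helper: offsets beyond +/- width are treated as hard left/right."""
    return max(-1.0, min(1.0, (source_x - listener_x) / width))
```

A game would call something like this every frame as the source or listener moves, so an engine roaring past the camera sweeps smoothly from one speaker to the other.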

The Role of Music in Game Audio

In the realm of video game sound design, music plays a pivotal role in shaping the player’s experience. Background scores are more than just a backdrop; they set the mood, enhance the atmosphere, and provide emotional depth to the game’s narrative. Imagine playing a thrilling action game without the adrenaline-pumping beats or exploring a mystical world without enchanting melodies. The right music can turn a simple game into an unforgettable adventure, immersing players in a unique environment and keeping them engaged for hours.

Composing music for video games involves a blend of creativity and technical expertise. Sound designers and composers often work closely with the game development team to ensure that the music complements the gameplay and narrative. Here are some key techniques used in game music composition:

- Interactive and Adaptive Music: This involves creating dynamic scores that change based on the player’s actions or the game’s environment. For example, the music might become more intense during a battle scene and mellow during exploration.

- Motifs and Leitmotifs: These are recurring musical themes associated with characters, locations, or events. They help in creating a cohesive soundscape and aid in storytelling. A character’s motif might change slightly to reflect their development throughout the game.

- Looping and Layering: Since players might spend varying amounts of time in different parts of a game, composers create music that can loop seamlessly. They also use layering to add complexity, allowing different musical elements to be introduced or removed based on in-game events.

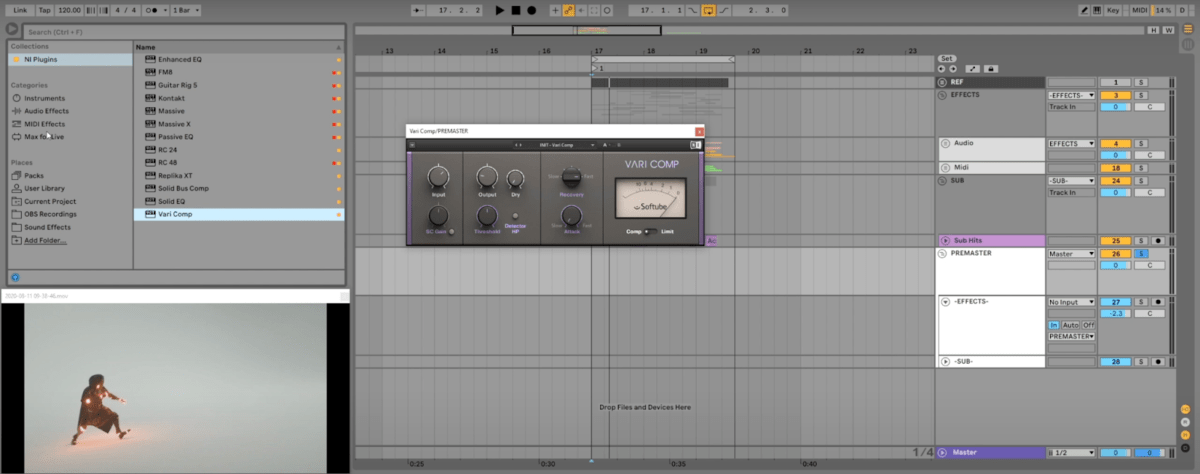

- Use of Real Instruments and Digital Audio Workstations (DAWs): While earlier video game sound design relied heavily on synthesized sounds, modern composers use a mix of real instruments and advanced DAWs to create rich, textured soundscapes.
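Seamless looping depends on cutting a loop at exactly the right length. Here is a minimal Python sketch of that arithmetic, assuming a steady tempo and a fixed time signature: one beat lasts 60 / BPM seconds, so a 4-bar loop in 4/4 at 120 BPM is 16 beats of 0.5 s each, 8 seconds in total, or 384,000 samples at 48 kHz. The 48 kHz default and the function name are assumptions for the example.

```python
def loop_length_samples(bars: int, beats_per_bar: int, bpm: float,
                        sample_rate: int = 48_000) -> int:
    """Length of a musical loop in samples.

    One beat lasts 60 / bpm seconds, so the loop lasts
    bars * beats_per_bar * 60 / bpm seconds.
    """
    seconds = bars * beats_per_bar * 60.0 / bpm
    samples = seconds * sample_rate
    # Refuse loops that do not land on a whole sample (see note below).
    if abs(samples - round(samples)) > 1e-6:
        raise ValueError(f"loop is not a whole number of samples: {samples}")
    return round(samples)
```

The whole-sample check matters because rounding a fractional loop length would add a tiny timing error on every repeat, and those errors accumulate the longer a player lingers in one area.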

The history of video game sound design is filled with memorable soundtracks that have left a lasting impact on players. Here are a few iconic examples:

- The Legend of Zelda: Ocarina of Time: This game is renowned for its use of music as a gameplay mechanic, with players using an in-game ocarina to solve puzzles and progress through the story. The soundtrack, composed by Koji Kondo, is a masterclass in creating an immersive and magical environment.

- Final Fantasy VII: Nobuo Uematsu’s score for this RPG is legendary, featuring themes that evoke a wide range of emotions. Tracks like “Aerith’s Theme” and “One-Winged Angel” are iconic in the realm of video game sound design.

- The Elder Scrolls V: Skyrim: Jeremy Soule’s epic score perfectly captures the vast and rugged landscape of Skyrim. The main theme, “Dragonborn,” is instantly recognizable and adds to the game’s grand sense of adventure.

- Undertale: Toby Fox’s soundtrack for this indie hit is a prime example of how unique and memorable music can enhance the player experience. Each character and area has its own distinctive theme, contributing to the game’s charm and emotional depth.

- Halo: The work of Martin O’Donnell and Michael Salvatori on the Halo series has become synonymous with epic sci-fi adventures. The Gregorian chant-like main theme is both haunting and heroic, perfectly setting the tone for the game’s universe.

These examples highlight how effective sound design and carefully crafted music can elevate a video game, creating a unique and immersive environment that resonates with players long after they’ve put down the controller. Learning from these masterpieces can inspire aspiring sound designers to create their own unforgettable game soundscapes.

Sound Effects: Bringing Games to Life

Sound effects are the unsung heroes of video game sound design. They breathe life into the virtual worlds, making every action feel real and every environment immersive. The process of designing and implementing sound effects involves creativity, technical skills, and an understanding of the game’s unique environment. Sound designers often start by brainstorming the types of sounds needed, from the clinking of armor to the rustling of leaves. They then either record these sounds or create them using digital audio workstations (DAWs). The final step is to integrate these sounds into the game, ensuring they trigger at the right moments and blend seamlessly with other audio elements.

Foley techniques, borrowed from the film industry, are crucial in video game sound design. Foley artists recreate everyday sound effects that enhance the auditory experience. For instance, footsteps, rustling cloth, or a sword being drawn are often recorded in a studio using various props. These sounds are then meticulously synced with the in-game actions. For video games, this means capturing the essence of the character’s movement and environment. Whether it’s the clattering of weapons in a medieval RPG or the creaking of floorboards in a haunted house, Foley techniques help create a more realistic and immersive experience.
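One way to sync recorded Foley with on-screen action is to trigger it from the animation itself. The Python sketch below is a hypothetical illustration, not any engine’s API: the animation reports each foot contact, and the player picks a recorded take for the surface underfoot (creaking wood versus stone or grass), skipping the take it just played so consecutive steps don’t repeat exactly. The file names, surfaces, and the `stone` fallback are all made up for the example.

```python
import random
from typing import Dict, List, Optional

# Hypothetical Foley library: several recorded takes per surface type.
FOOTSTEP_TAKES: Dict[str, List[str]] = {
    "wood": ["step_wood_01.wav", "step_wood_02.wav", "step_wood_03.wav"],
    "stone": ["step_stone_01.wav", "step_stone_02.wav"],
    "grass": ["step_grass_01.wav", "step_grass_02.wav"],
}


class FootstepPlayer:
    """Chooses a Foley take whenever an animation reports a foot contact."""

    def __init__(self, takes: Dict[str, List[str]], default_surface: str = "stone",
                 rng: Optional[random.Random] = None) -> None:
        self.takes = takes
        self.default_surface = default_surface
        self.rng = rng or random.Random()
        self.last_played: Dict[str, str] = {}  # most recent take per surface

    def on_foot_contact(self, surface: str) -> str:
        """Called from the animation's foot-down event; returns the file to play."""
        if surface not in self.takes:
            surface = self.default_surface  # unknown material: fall back
        options = self.takes[surface]
        # Skip the take we just played so consecutive steps don't repeat exactly.
        fresh = [t for t in options if t != self.last_played.get(surface)]
        take = self.rng.choice(fresh or options)
        self.last_played[surface] = take
        # A real game would hand this to its audio engine at the foot's position.
        return take
```

Tying the trigger to the animation frame, rather than to a timer, keeps the sound locked to the visible footfall even when the character changes speed.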

Environmental sounds are a vital component of creating an immersive game world. These background noises, like the chirping of birds, the distant rumble of thunder, or the hustle and bustle of a busy market, add depth to the game’s setting. Sound designers often spend hours in the field recording real-world sounds or layering multiple audio tracks to achieve the desired effect. In an open-world game like The Witcher 3, environmental sounds play a key role in making the game world feel alive and dynamic. Players can hear the wind rustling through trees, water flowing in rivers, and even subtle echoes in caves, all contributing to a rich auditory tapestry.
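Echoes like the ones heard in caves can be approximated with a feedback delay, where each repeat is a quieter copy of the previous one: y[n] = x[n] + g * y[n - D]. This Python sketch is a single-tap echo, far simpler than the reverbs used in shipping games; the function and parameter names are illustrative.

```python
from typing import List, Sequence


def add_echo(dry: Sequence[float], delay_samples: int, feedback: float,
             tail: int = 0) -> List[float]:
    """Single-tap feedback echo: out[n] = in[n] + feedback * out[n - delay_samples].

    `tail` appends that many samples of silence so the echoes can ring out
    after the input ends.
    """
    if delay_samples <= 0:
        raise ValueError("delay_samples must be positive")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) so the echoes die away")
    signal = list(dry) + [0.0] * tail
    out = [0.0] * len(signal)
    for n, x in enumerate(signal):
        # Feeding earlier output back in creates a train of ever-quieter repeats.
        delayed = out[n - delay_samples] if n >= delay_samples else 0.0
        out[n] = x + feedback * delayed
    return out
```

Feeding in a single click, `add_echo([1.0], delay_samples=2, feedback=0.5, tail=6)` returns `[1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]`. For an audible cave echo you would use a delay of a few hundred milliseconds instead, such as `int(0.25 * 48_000)` samples at 48 kHz.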

Sound effects, Foley techniques, and environmental sounds are essential elements in the art of video game sound design. They not only enhance the realism of the game world but also engage players on a deeper sensory level, making every in-game action and setting feel vibrant and lifelike. By mastering these aspects, sound designers can create unforgettable gaming experiences that truly bring games to life.

Voice Acting in Games

Voice acting in video games has come a long way from the early days of text-based dialogue and simple grunts. Initially, technical limitations restricted the use of voiceovers, but as technology advanced, so did the quality and quantity of voice acting. The mid-90s saw a significant leap with games like Resident Evil, which, despite its famously cheesy dialogue, set the stage for future developments. By the end of the decade, games like Metal Gear Solid and Half-Life (both released in 1998) were showing how high-quality performances could add depth to characters and stories, and in the 2000s voice acting became standard.

Recording voiceovers for video games is a complex process that involves several techniques to ensure high-quality audio that fits seamlessly within the game. Here are a few key steps in the process:

- Script Preparation: Scripts are meticulously crafted, often requiring collaboration between writers and sound designers to ensure the dialogue flows naturally and fits the game’s context.

- Casting: Finding the right voice actors is crucial. The actors need to bring the characters to life with their vocal performances, matching the tone and personality envisioned by the game’s creators.

- Recording Sessions: Voice actors record their lines in a controlled studio environment, often performing multiple takes to capture the perfect delivery. Directors guide the actors to ensure their performances align with the game’s narrative and emotional beats.

- Editing and Processing: The recorded lines are then edited for clarity, consistency, and timing. Sound engineers may use effects like reverb, EQ, and compression to enhance the audio quality and ensure it blends well with other sound elements in the game.

- Integration: Finally, the voiceovers are integrated into the game using middleware like FMOD or Wwise, allowing for precise control over how and when the lines are triggered during gameplay.
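Of the processing steps above, compression is the least intuitive, so here is a minimal Python sketch of a hard-knee compressor’s static gain curve: below the threshold nothing changes, and above it the overshoot is divided by the ratio. The -18 dB threshold and 4:1 ratio defaults are arbitrary, and a real compressor also smooths its gain changes over time with attack and release settings.

```python
def compressor_gain_db(level_db: float, threshold_db: float = -18.0,
                       ratio: float = 4.0) -> float:
    """Gain change (in dB) a hard-knee compressor applies at a given input level.

    Below the threshold the signal is untouched. Above it, every `ratio` dB
    of input produces only 1 dB of output above the threshold.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    if level_db <= threshold_db:
        return 0.0
    output_db = threshold_db + (level_db - threshold_db) / ratio
    return output_db - level_db


def db_to_linear(db: float) -> float:
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10 ** (db / 20)
```

With those defaults, a line peaking at -6 dB is 12 dB over the threshold; at 4:1 that overshoot shrinks to 3 dB, so `compressor_gain_db(-6.0)` returns `-9.0`. Quiet lines are left alone while loud ones are pulled down, which evens out a performance against the rest of the mix.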

In narrative-driven games, voice acting is not just an enhancement—it’s essential. Great voice acting can elevate a game’s storytelling, making characters more relatable and the plot more engaging. For example, the emotional depth of characters in games like The Last of Us owes much to the outstanding performances of its voice actors. Players form deeper connections with characters when they can hear their emotions, struggles, and triumphs conveyed through compelling voice work.

Voice acting also adds a layer of realism and immersion. In games like Mass Effect, players make choices that affect the story, and hearing the characters’ reactions makes these decisions feel impactful. Additionally, well-executed voice acting can enhance the atmosphere and tension in horror games, like the chilling performances in Outlast, making the experience more immersive and terrifying.

The evolution of voice acting in games, from basic lines to sophisticated performances, highlights its importance in modern video game sound design. Techniques for recording and integrating voiceovers have become more advanced, ensuring high-quality audio that enhances the gaming experience. In narrative-driven games, voice acting is crucial for building emotional connections and immersing players in the story, making it an indispensable element of game design.

The Rise of Adaptive Audio

Adaptive audio, closely related to (and often used interchangeably with) interactive audio, represents a significant leap in video game sound design. Unlike traditional linear soundtracks, adaptive audio changes in response to the player’s actions and the game environment, creating a more immersive and personalized experience. This approach allows the music and sound effects to dynamically adjust based on in-game events, making each player’s experience unique and engaging. Imagine the intensity of a battle scene with music that escalates as the fight progresses, or calming nature sounds that shift as you explore different areas: this is the magic of adaptive audio.

Creating dynamic soundscapes involves several sophisticated techniques and tools. Here are some key methods used by sound designers:

- Real-Time Audio Processing: Utilizing software like FMOD or Wwise, sound designers can process audio in real time, allowing the game to alter sound effects and music dynamically based on gameplay. This enables a seamless transition between different audio states, such as shifting from exploration to combat.

- Layering and Stems: Music and sound effects are often composed in layers or stems, which can be independently controlled and mixed. For example, a music track might have separate layers for melody, harmony, and rhythm. These layers can be added or removed in real time to match the game’s intensity.

- Parameter-Based Audio Changes: Designers set up parameters that control how audio changes in response to game events. For instance, the game’s code might increase the tempo and volume of the music as the player’s health decreases, heightening the sense of urgency.

- Environmental Triggers: Sounds can be tied to specific in-game triggers, such as entering a new area, encountering enemies, or completing objectives. This ensures that the audio directly responds to the player’s interactions, enhancing immersion.
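These techniques can be combined in a small controller. The Python sketch below is hypothetical: in practice this logic often lives in middleware such as FMOD or Wwise, driven by parameters the game sends, and every name, threshold, and curve here is an assumption. Triggers switch the music state, a health parameter sets the intensity, and intensity fades stems in and out while nudging tempo and overall volume up.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class AdaptiveMusic:
    """Hypothetical adaptive-music controller; all names and numbers are illustrative."""

    base_tempo_bpm: float = 100.0
    state: str = "exploration"
    # Layering: each stem starts fading in once intensity passes its threshold.
    stem_thresholds: Dict[str, float] = field(default_factory=lambda: {
        "pads": 0.0, "percussion": 0.3, "brass": 0.6, "choir": 0.85,
    })
    # Environmental triggers: game events mapped to the music state they switch to.
    transitions: Dict[str, str] = field(default_factory=lambda: {
        "enemy_spotted": "combat",
        "enemies_cleared": "exploration",
        "entered_town": "town",
    })

    def on_trigger(self, event: str) -> None:
        """Switch state on a known event; unknown events leave the music alone."""
        self.state = self.transitions.get(event, self.state)

    def intensity(self, health: float) -> float:
        """Parameter mapping: in combat, lower health (0..1) means higher intensity."""
        health = max(0.0, min(1.0, health))
        if self.state != "combat":
            return 0.2  # calm baseline outside combat
        return 0.5 + 0.5 * (1.0 - health)

    def stem_volumes(self, intensity: float, fade_width: float = 0.1) -> Dict[str, float]:
        """Each stem ramps linearly from silent to full over `fade_width` above its threshold."""
        return {name: max(0.0, min(1.0, (intensity - threshold) / fade_width))
                for name, threshold in self.stem_thresholds.items()}

    def tempo(self, intensity: float) -> float:
        """Up to 20% faster than the base tempo at full intensity."""
        return self.base_tempo_bpm * (1.0 + 0.2 * intensity)

    def master_gain(self, intensity: float) -> float:
        """Overall volume rises from 0.6 to 1.0 as intensity goes from 0 to 1."""
        return 0.6 + 0.4 * intensity
```

For example, after `on_trigger("enemy_spotted")`, a player at 20% health gets an intensity of 0.9: pads, percussion, and brass play at full volume, the choir is halfway faded in, and the tempo rises from 100 to about 118 BPM. Keeping intensity as a single 0-to-1 value means a designer can retune how the music reacts by editing the thresholds, without touching the game code that reports health and events.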

Several games have masterfully implemented adaptive audio, demonstrating its potential to transform the gaming experience. Here are some notable examples:

- The Legend of Zelda: Breath of the Wild: This game uses adaptive music to enhance its open-world exploration. The soundtrack subtly changes based on the player’s actions and location, creating a fluid and immersive audio experience that matches the game’s dynamic environment.

- Hellblade: Senua’s Sacrifice: Known for its innovative use of binaural audio, this game creates an intense and immersive experience by adapting its soundscape to reflect the protagonist’s mental state. The audio design plays a crucial role in conveying the character’s psychological journey.

- Red Dead Redemption 2: This game uses adaptive audio to bring its vast, living world to life. The music and ambient sounds adjust based on the player’s activities and surroundings, making the game world feel reactive and authentic.

- Journey: The soundtrack of Journey dynamically evolves as players progress through the game, creating an emotional and cohesive experience. The music seamlessly adapts to the player’s actions, enhancing the sense of discovery and connection.

- Dead Space: In this survival horror game, adaptive audio intensifies the sense of dread and suspense. The music and sound effects react to the player’s actions and the presence of threats, making each encounter feel more terrifying and immersive.

Adaptive audio is a powerful tool in video game sound design, offering a level of immersion and personalization that static soundtracks cannot achieve. By leveraging real-time processing, layering, parameter-based changes, and environmental triggers, sound designers create dynamic soundscapes that enhance the player’s connection to the game world. Games like Breath of the Wild, Hellblade, and Red Dead Redemption 2 showcase the incredible potential of adaptive audio, setting new standards for what players can expect in their auditory gaming experiences.

Tools and Software for Game Audio Design

When it comes to game audio design, a few tools stand out for their powerful capabilities and ease of use. Here’s a look at some of the most popular tools in the industry:

- FMOD: FMOD is a comprehensive sound engine used for designing interactive and adaptive audio. It’s known for its user-friendly interface and robust features, making it a favorite among sound designers. FMOD allows designers to create complex audio behaviors without needing extensive coding knowledge.

- Wwise: Wwise, short for Wave Works Interactive Sound Engine, is another top choice for game audio design. It offers a rich set of features for real-time audio processing, spatial audio, and interactive music. Wwise is highly regarded for its flexibility and integration capabilities.

- Audiokinetic: Strictly speaking a company rather than a tool, Audiokinetic develops Wwise and provides a suite of related tools and services for game audio professionals. Its ecosystem supports everything from sound design to implementation and testing.

- Pro Tools: While Pro Tools is more commonly associated with music production and film, it’s also widely used in game audio for recording, editing, and mixing. Its powerful editing capabilities make it a go-to for creating high-quality sound effects and voiceovers.

Both FMOD and Wwise offer seamless integration with major game development engines, including Unity and Unreal Engine, which are among the most widely used platforms in the industry.

- FMOD: FMOD provides a robust integration with Unity, allowing designers to implement interactive audio directly within the engine. The FMOD Unity plugin makes it easy to link audio events with game events, creating a cohesive audio experience. FMOD’s Unreal Engine integration allows for advanced audio functionality within the Unreal Editor. Designers can use FMOD Studio to create complex audio events and control them through Unreal’s Blueprint system.

- Wwise: Wwise’s Unity integration is similarly powerful, offering tools to manage audio assets and real-time audio behaviors, with detailed control over audio settings and dynamic soundscapes. Wwise also integrates tightly with Unreal Engine through the Wwise Unreal Integration, offering features like spatial audio and real-time parameter control, along with extensive customization options and support for advanced audio behaviors. Separately, the Wwise Authoring API (WAAPI) lets external scripts and tools control the Wwise authoring application itself.